Cognitive Exams - General Information

You may also be interested in the How To Apply For A Cognitive Exam page or the Cognitive Exam Policies page.

About the National Registry Cognitive Examinations

The National Registry has two examination formats for the cognitive examination: Computer Adaptive Tests (CAT) and Linear examinations. CAT examinations allow shorter, more precise tests individualized to each candidate’s level of knowledge and skills. The National Registry uses the CAT format for Emergency Medical Responder (EMR), Emergency Medical Technician (EMT), and Paramedic (NRP) examinations. A passing standard, identical for all candidates at their level of certification, is used to determine whether a candidate is successful or unsuccessful on the cognitive examination.

Candidates seeking National EMS Certification as an Advanced EMT take a linear examination. A linear computer-based test (CBT) is a fixed-length computer version of a paper-and-pencil exam.

The National Registry uses the same process to develop all test items. First, external subject matter experts draft an item, also known as a test question. Next, the item goes through an extensive review process to ensure:

- Correct responses are correct.

- The item is accurate.

- The question is current.

- The content is clinically relevant.

- Incorrect responses are not partially correct.

This review process includes internal and external subject matter expert review, referencing, and editing.

Items are then pilot tested during live examinations. The National Registry collects enough data from candidate responses to pilot items to estimate each item’s level of difficulty and evaluate the item for evidence of bias. The National Registry does not count responses to piloted items towards the candidate's score. Piloted items that do not meet the National Registry’s strict standards for calibration are revised and re-piloted or discarded. The National Registry places only items that meet rigorous standards as scored questions on the examination.

The difficulty statistic of an item identifies the “ability” necessary to answer an item correctly. The level of ability required to respond to an item correctly may be low, moderate, or high, depending on the estimated difficulty of the test question.
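The calibration step described above can be illustrated with a short sketch. The document does not name its measurement model, so this example assumes a Rasch (one-parameter logistic) model, in which an item's difficulty and a candidate's ability sit on the same logit scale, and assumes that each pilot respondent's ability is already known from their scored items. The function names are hypothetical; this is not the National Registry's calibration software.

```python
import math


def rasch_p(theta: float, b: float) -> float:
    """Probability of a correct response for ability theta on an item of difficulty b."""
    return 1.0 / (1.0 + math.exp(-(theta - b)))


def estimate_difficulty(abilities, responses, iterations=50, tol=1e-8):
    """Maximum-likelihood difficulty of one pilot item (Newton-Raphson).

    abilities: ability estimates (logits) of the candidates who saw the item.
    responses: 1 for a correct answer, 0 for an incorrect one, in the same order.
    """
    if len(set(responses)) < 2:
        # All-correct or all-incorrect data push the estimate toward +/- infinity.
        raise ValueError("need both correct and incorrect responses")
    b = 0.0
    for _ in range(iterations):
        probs = [rasch_p(theta, b) for theta in abilities]
        # Derivative of the log-likelihood with respect to b: expected minus observed.
        gradient = sum(p - x for p, x in zip(probs, responses))
        information = sum(p * (1.0 - p) for p in probs)
        step = gradient / information
        b += step
        if abs(step) < tol:
            break
    return b


# Four candidates of average ability (0 logits); three answer the pilot item correctly.
print(round(estimate_difficulty([0.0, 0.0, 0.0, 0.0], [1, 1, 1, 0]), 4))  # prints -1.0986
```

The negative result (exactly -ln 3 here) marks an item that is easier than average for these candidates; an item that most of them missed would receive a positive difficulty.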

About the Minimum Passing Standard

The minimum passing standard is the level of knowledge or ability that a competent EMS provider must demonstrate to practice safely. The National Registry Board of Directors sets the passing standard and reviews it at least every three years. A recommendation from a panel of experts and providers from the EMS community informs the Board’s actions. Psychometricians, experts in testing, facilitate the panels. The panel uses various recognized methods (such as the Angoff method) to assess how a minimally competent provider would respond to examination items. Panel assessments are combined to form a recommendation on the minimum passing standard for the exam. The Board considers this recommendation and the impact on the community to set the minimum passing standard.
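The Angoff method mentioned above can be illustrated with a minimal sketch of its basic form. Each panelist estimates, for every item, the probability that a minimally competent provider would answer it correctly; a panelist's cut score is the sum of those estimates, and in this sketch the panel recommendation is simply the average across panelists. The ratings are invented and the function name is hypothetical; the National Registry's actual process uses several methods and leaves the final decision to the Board.

```python
from statistics import mean


def angoff_cut_score(ratings):
    """Basic Angoff cut score on the raw-score scale.

    ratings[p][i] is panelist p's estimate (0 to 1) of the probability that a
    minimally competent provider answers item i correctly.
    """
    n_items = len(ratings[0])
    for row in ratings:
        if len(row) != n_items or not all(0.0 <= r <= 1.0 for r in row):
            raise ValueError("each panelist must rate every item with a value in [0, 1]")
    # Each panelist's cut score is the expected raw score of a minimally competent provider.
    panelist_cuts = [sum(row) for row in ratings]
    # This sketch averages the panelists' cut scores to form the recommendation.
    return mean(panelist_cuts)


# Hypothetical ratings: two panelists, four items.
ratings = [
    [0.50, 0.75, 1.00, 0.25],
    [0.75, 0.75, 0.50, 0.50],
]
cut = angoff_cut_score(ratings)
print(cut, cut / len(ratings[0]))  # prints 2.5 0.625
```

Here the recommendation corresponds to 2.5 of 4 items, or 62.5%, which the Board would then weigh alongside its impact on the community.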

Pilot Questions

During National Registry exams, every candidate receives pilot questions that are indistinguishable from scored items. Pilot questions do not count toward a candidate’s score. The number of pilot items on each exam is listed below:

- EMR: 30 items

- EMT: 10 items

- AEMT: 35 items

- Paramedic: 20 items

Computer Adaptive Tests

CAT examinations are delivered differently from fixed-length exams, such as computer-based linear tests and paper-and-pencil exams, and may feel more difficult. Candidates should not be concerned about the difficulty of any individual item on a CAT exam, because the examination is scored differently than a fixed-length examination. All items are placed on a standard scale, and the candidate’s responses identify where the candidate falls on that scale. Because every response contributes to that placement, candidates should answer all items to the best of their ability. Let’s use an example to explain this:

An athlete is trying out to be a high jumper on the track team. To score points, jumpers must clear a bar placed four feet above the ground. The bar is the competency standard. Athletes who cannot jump more than three feet during tryouts will rarely, if ever, score points for their team during a track meet. Athletes who can jump four feet (the minimum) on the first day of tryouts may later, with training and coaching, learn to jump five feet or more. The coach knows that it will be worth the time and effort to coach these athletes to greater heights. Therefore, those who clear four feet at tryouts become members of the high jump team because they have met the entry-level competency standard.

The coach holds a tryout to see who meets the entry-level competency standard. The coach sets the bar at 3 feet 6 inches for the first jump attempt. Athletes who clear this bar progress to 3 feet 9 inches. Athletes who succeed at that height then attempt to clear 4 feet. To learn the maximum ability of each athlete, the coach does not tell the team the required height.

The coach finds that seven of ten athletes clear the bar at the four-foot level. The coach then raises the bar to 4 feet 3 inches and later to 4 feet 6 inches, continuing to increase the height until the coach determines the maximum ability of each athlete. This process tells the coach each athlete’s ability on a standard scale (feet and inches). The coach then sets a standard (4 feet) for membership on the team, based on knowledge of what is necessary to score points at track meets (the competency standard).

How a CAT Exam Works

The high-jump analogy describes the way a CAT exam works. First, the National Registry calibrates every item during pilot testing on live examinations to estimate its difficulty on a standard scale. Then, as the candidate takes the examination, the computer adaptive test estimates the candidate’s ability level by comparing their responses against that scale.

The test begins with an item whose difficulty is slightly below the passing standard. The item may come from any content area in the test plan:

- Airway, Respiration & Ventilation

- Cardiology & Resuscitation

- Trauma

- Medical/Obstetrics/Gynecology

- EMS Operations

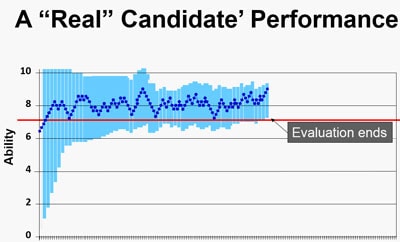

After the candidate answers a short series of items correctly, the computer chooses items of higher difficulty, perhaps near entry-level competency. The examination selects these items from a variety of content areas of the test plan. If the candidate answers most of these questions correctly, the computer chooses new items at an even higher difficulty level. Again, if the candidate answers many of these items correctly, the computer presents more items at an even higher difficulty level. Eventually, every candidate reaches their maximum ability level and begins to answer items incorrectly. In this way, the computer evaluates a candidate’s ability level in real time. The examination ends once the candidate has answered a minimum number of items and the computer is sufficiently confident that the candidate is above or below the passing standard.
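The item-selection loop described above can be sketched in a few functions. The National Registry does not publish its algorithm here, so this is a minimal sketch under stated assumptions: a Rasch model; the next item comes from whichever content area has been used least (a simple stand-in for content balancing); within that area, the item whose calibrated difficulty is closest to the current ability estimate is chosen; and ability is re-estimated after each response with a standard-normal prior that keeps the estimate finite. All names, the 0.5-logit starting offset, and the toy item pool are hypothetical.

```python
import math
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Item:
    item_id: str
    area: str          # content area from the test plan
    difficulty: float  # calibrated difficulty on the logit scale


def p_correct(theta, b):
    """Rasch probability that a candidate of ability theta answers correctly."""
    return 1.0 / (1.0 + math.exp(-(theta - b)))


def estimate_ability(difficulties, responses, iterations=30):
    """MAP ability estimate with a standard-normal prior (Newton-Raphson).

    The prior keeps the estimate finite after a run of all-correct or
    all-incorrect answers, which is common early in an adaptive test.
    """
    theta = 0.0
    for _ in range(iterations):
        probs = [p_correct(theta, b) for b in difficulties]
        slope = sum(x - p for x, p in zip(responses, probs)) - theta
        curvature = sum(p * (1.0 - p) for p in probs) + 1.0
        theta += slope / curvature
    return theta


def next_item(pool, used_ids, theta, area_counts):
    """Pick an unused item from the least-used content area whose difficulty is
    closest to the current ability estimate (the most informative Rasch item)."""
    available = [it for it in pool if it.item_id not in used_ids]
    fewest = min(area_counts[it.area] for it in available)
    candidates = [it for it in available if area_counts[it.area] == fewest]
    return min(candidates, key=lambda it: abs(it.difficulty - theta))


def run_cat(pool, answers_correctly, passing_standard, num_items, start_offset=0.5):
    """Administer num_items adaptively; answers_correctly(item) simulates a candidate."""
    used, difficulties, responses = set(), [], []
    area_counts = Counter()
    theta = passing_standard - start_offset  # start slightly below the standard
    for _ in range(num_items):
        item = next_item(pool, used, theta, area_counts)
        used.add(item.item_id)
        area_counts[item.area] += 1
        difficulties.append(item.difficulty)
        responses.append(1 if answers_correctly(item) else 0)
        theta = estimate_ability(difficulties, responses)
    return theta, difficulties


AREAS = [
    "Airway, Respiration & Ventilation",
    "Cardiology & Resuscitation",
    "Trauma",
    "Medical/Obstetrics/Gynecology",
    "EMS Operations",
]
# Hypothetical pool: 25 items per area, difficulties from -3.0 to 3.0 logits.
POOL = [Item(f"{area}-{k}", area, -3.0 + 0.25 * k) for area in AREAS for k in range(25)]

strong_theta, strong_path = run_cat(POOL, lambda it: it.difficulty <= 1.0, 0.0, 20)
weak_theta, _ = run_cat(POOL, lambda it: it.difficulty <= -1.0, 0.0, 20)
print(round(strong_theta, 2), round(weak_theta, 2))
```

In this toy run, the simulated candidate who can handle items up to 1.0 logit ends with a higher estimate than the one who can handle items only up to -1.0 logit, and the stronger candidate's second item is harder than the first, mirroring the step-up described in the text.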

A 95% Confidence Level Is Necessary to Pass or Fail a CAT Exam

The computer stops the exam in any of the following situations:

- The candidate has answered at least the minimum number of items, and there is 95% confidence that the candidate is at or above the passing standard.

- The candidate has answered at least the minimum number of items, and there is 95% confidence that the candidate is below the passing standard.

- The candidate has reached the maximum allotted time.

The length of a CAT exam varies. A candidate can demonstrate a level of competency in as few as 60 test items. Candidates whose ability is close to entry-level competency must provide the computer with more data before it can determine with 95% confidence that they are above or below the passing standard, so the examination continues to administer items in these cases. Each additional item provides more information to determine whether the candidate meets the passing standard. Test items vary across the content domains regardless of the length of the examination.
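The stopping rule can be sketched as a confidence-interval check on the ability estimate. This sketch assumes a Rasch model, so the standard error comes from the test information at the current estimate, and it assumes a two-sided 95% interval (z = 1.96); a one-sided rule would use 1.645 instead. The function names and the item limits in the usage lines are hypothetical, not published National Registry parameters. The maximum-length branch follows the document's high-jump explanation of candidates near the standard.

```python
import math


def standard_error(theta, difficulties):
    """Rasch standard error of an ability estimate: 1 / sqrt(test information)."""
    information = 0.0
    for b in difficulties:
        p = 1.0 / (1.0 + math.exp(-(theta - b)))
        information += p * (1.0 - p)
    return 1.0 / math.sqrt(information)


def stopping_decision(theta, difficulties, passing_standard, min_items, max_items,
                      time_expired=False, z=1.96):
    """Return "pass", "fail", or "continue" for a CAT in progress.

    z = 1.96 gives a two-sided 95% confidence interval around theta; whether the
    National Registry uses a one- or two-sided rule is an assumption here.
    """
    if time_expired:
        return "fail"  # an attempt not completed in the allotted time is unsuccessful
    n = len(difficulties)
    if n < min_items:
        return "continue"
    se = standard_error(theta, difficulties)
    if theta - z * se >= passing_standard:
        return "pass"  # the whole interval is at or above the standard
    if theta + z * se < passing_standard:
        return "fail"  # the whole interval is below the standard
    if n >= max_items:
        # At maximum length, decide on the point estimate (the 4 feet 1 inch
        # versus 3 feet 11 inches case in the high-jump analogy).
        return "pass" if theta >= passing_standard else "fail"
    return "continue"


# Hypothetical limits: minimum 60 items, maximum 120 items, standard at 0 logits.
print(stopping_decision(1.5, [1.5] * 60, 0.0, min_items=60, max_items=120))  # pass
print(stopping_decision(0.2, [0.2] * 60, 0.0, min_items=60, max_items=120))  # continue
```

The second call continues because an estimate only 0.2 logits above the standard, after 60 items, still leaves the standard inside the interval; each further item shrinks the standard error until the interval clears the standard or the maximum length is reached.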

A candidate’s ability estimate is most precise when the examination reaches its maximum length. The computer needs this level of precision for candidates whose abilities are close to the passing standard. In terms of the high-jump analogy, the computer can distinguish those who jump 3 feet 11 inches from those who jump 4 feet 1 inch. Those who clear 4 feet more often than they miss it will pass. Those who jump 3 feet 11 inches but fail to clear 4 feet often enough will fail and must repeat the examination.

Some candidates will not be able to jump close to four feet. These candidates are below, or well below, entry-level competency. The examination can rapidly determine that these candidates are below the passing standard, so their examinations also end quickly.

A candidate taking a CAT examination needs to answer every question to the best of their ability. The CAT exam provides precision, efficiency, and confidence that a successful candidate meets the definition of entry-level competency and can be a Nationally Certified EMS provider. An examination attempt is considered unsuccessful if a candidate does not complete the examination in the allotted time.

Linear Examinations

Linear examinations are fixed-length examinations. The Advanced EMT examination is currently a linear examination.

Candidates cannot skip questions or go back and change their responses. There is no penalty for guessing. An examination attempt is considered unsuccessful if a candidate does not complete the examination in the allotted time.

What does the National Registry include in the examination?

The National Registry develops examinations that measure the essential aspects of out-of-hospital practice. To facilitate this, the National Registry performs a practice analysis to identify the tasks used in clinical care and the knowledge, skills, and abilities needed to perform those tasks. The practice analysis involves evaluating millions of EMS runs generated by thousands of EMS agencies and the input of over 3,500 subject matter experts. Next, psychometricians use this information to develop the test plan, which includes identifying content domains. Finally, subject matter experts create test questions based on the test plan. This process links examination content to EMS practice and establishes content validity. The National EMS Scope of Practice Model, the National EMS Education Standards, and the National Registry Practice Analysis guide content development.

EMS education programs are encouraged to review the current National Registry practice analysis when teaching courses and as part of the final review of students’ abilities to correctly perform the tasks necessary for competent patient care.

Based on the 2014 Practice Analysis, the current National EMS Certification Examinations cover five content areas:

- Airway, Respiration & Ventilation

- Cardiology & Resuscitation

- Trauma

- Medical/Obstetrics/Gynecology

- EMS Operations

All sections, except EMS Operations, have a content distribution of 85% adult and 15% pediatrics.

| Content Area | EMR (90-110 items) | EMT (70-120 items) | Advanced EMT (135 items) | Paramedic (80-150 items) |
| --- | --- | --- | --- | --- |
| Airway, Respiration & Ventilation | 18%-22% | 18%-22% | 18%-22% | 18%-22% |
| Cardiology & Resuscitation | 20%-24% | 20%-24% | 21%-25% | 22%-26% |
| Trauma | 15%-19% | 14%-18% | 14%-18% | 13%-17% |
| Medical/Obstetrics/Gynecology | 27%-31% | 27%-31% | 26%-30% | 25%-29% |
| EMS Operations | 11%-15% | 10%-14% | 11%-15% | 10%-14% |
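To see what the percentage ranges mean in whole items, the short sketch below converts one column of the table into item counts. It assumes the percentages apply to the full item count in the column header (135 for the fixed-length Advanced EMT exam); the document does not say whether pilot items are part of that count, so treat the results as illustrative. The dictionary and function names are hypothetical.

```python
# Percentage ranges for the Advanced EMT exam (135 items), taken from the table.
AEMT_BLUEPRINT = {
    "Airway, Respiration & Ventilation": (18, 22),
    "Cardiology & Resuscitation": (21, 25),
    "Trauma": (14, 18),
    "Medical/Obstetrics/Gynecology": (26, 30),
    "EMS Operations": (11, 15),
}


def item_count_range(percent_low, percent_high, total_items):
    """Smallest and largest whole-item counts inside a percentage range.

    Uses integer arithmetic: ceiling for the lower bound, floor for the upper,
    so both counts stay inside the published range.
    """
    low = -(-percent_low * total_items // 100)  # ceiling division
    high = percent_high * total_items // 100    # floor division
    return low, high


for area, (pct_low, pct_high) in AEMT_BLUEPRINT.items():
    print(f"{area}: {item_count_range(pct_low, pct_high, 135)}")
```

For example, 18%-22% of 135 items is 24.3 to 29.7 items, so Airway, Respiration & Ventilation would account for 25 to 29 whole items.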

National EMS Practice Analysis

The goal of licensure and certification is to assure the public that individuals who work in a particular profession have met specific standards and are qualified to engage in EMS care (American Educational Research Association, American Psychological Association, and National Council on Measurement in Education, 1999). To meet the goal of measuring competency accurately, reliably, and validly (Kane, 1982), the National Registry bases certification and licensure requirements on a candidate’s ability to practice safely and effectively. Therefore, National Registry examination development uses a practice analysis as a critical component of a legally defensible and psychometrically sound credentialing process.

The primary purpose of a practice analysis is to develop a clear and accurate picture of the current practice of a job or profession, in this case, the provision of emergency medical care in the out-of-hospital environment. The results of the practice analysis are used throughout the entire National Registry examination development process to ensure a connection between the examination content and EMS practice. The practice analysis helps answer the questions, “What are the most important aspects of practice?” and “What constitutes safe and effective care?” It also enables the National Registry to develop examinations that reflect the contemporary, real-life practice of out-of-hospital emergency medicine.

The National Registry collects data about EMS practice that identify tasks, knowledge, skills, and abilities. An analysis of the collected data provides evidence of the frequency and criticality of each identified task. The psychometrics team combines these into a weighted importance score and totals the scores for each of the five domains. The National Registry then uses the proportion of the total weighted importance represented by each domain to set the blueprint for the next five years of National Registry examinations. A copy of the 2014 Practice Analysis is available for free. Download it here.
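The step from task ratings to a blueprint is a proportion calculation, sketched below. The document does not say what rating scales are used or how frequency and criticality are combined, so this sketch assumes a simple product of the two and uses invented ratings; only the domain names come from the document, and the function name is hypothetical.

```python
from collections import defaultdict


def blueprint_proportions(task_ratings):
    """Share of total weighted importance for each domain.

    task_ratings: iterable of (domain, frequency, criticality) tuples.
    Multiplying frequency by criticality is a hypothetical weighting rule.
    """
    totals = defaultdict(float)
    for domain, frequency, criticality in task_ratings:
        totals[domain] += frequency * criticality
    grand_total = sum(totals.values())
    return {domain: total / grand_total for domain, total in totals.items()}


# Invented ratings for four tasks in three of the five domains.
tasks = [
    ("Airway, Respiration & Ventilation", 5, 10),
    ("Trauma", 3, 5),
    ("Trauma", 5, 3),
    ("EMS Operations", 4, 5),
]
for domain, share in blueprint_proportions(tasks).items():
    print(f"{domain}: {share:.0%}")
```

With these made-up ratings the domains receive 50%, 30%, and 20% of the weighted importance; in the real process, shares like these would be translated into the percentage ranges shown in the content table.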

Example Items

Below are some of the types of questions entry-level providers can expect to answer on the exam:

- A 24-year-old patient fell while skateboarding and has a painful, swollen, deformed lower arm. An EMT is unable to palpate a radial pulse. What should the EMT do next?

  - Apply cold packs to the injury.

  - Align the arm with gentle traction.

  - Splint the arm in the position found.

  - Ask the patient to try moving their arm.

- An 86-year-old patient with terminal brain cancer is disoriented after a fall. The patient reports severe right hip pain. The spouse tells the EMT that the patient has DNR orders and does not want the patient transported. What should the EMT do next?

  - Explain the risks of refusal of transport.

  - Ask to see the patient’s DNR orders.

  - Have the patient sign a refusal form.

  - Request law enforcement intervention.

- Law enforcement officers have detained a patient who they believe is drunk. The officers called because the patient has a history of diabetes. An EMT administers oral glucose, and within a minute, the patient becomes unresponsive. What should an EMT do first?

  - Perform chest compressions.

  - Initiate rapid transport.

  - Begin positive pressure ventilations.

  - Suction the patient’s airway.

How are test questions (items) created?

The National Registry test development process is a complex integration of multiple teams, organizations, volunteers, and internal staff. The process combines data science, subject matter expertise, and various specialized and complex skills and competencies to create each question. A single test question takes approximately one year to create and costs roughly $1,800 to develop. The item development process is uniform across all National EMS Certifications.

Volunteers from the EMS community write test questions based on the test plan. Volunteers then submit the questions to the National Registry. The Examination team then performs several rounds of internal review where test questions are referenced and reviewed for clinical accuracy, grammar, and style. Next, a committee of external subject matter experts reviews each item for accuracy, correctness, relevance, and currency. Test questions are then reviewed again by internal staff for any final referencing needs or grammatical issues. The entire review process can take six months or longer from start to finish. The process ensures that:

- Every question is referenced to a task in the practice analysis.

- Each correct answer is correct, current, and accurate.

- Incorrect options are plausible and not partially correct.

- Commonly available EMS textbooks contain each answer.

Controversial questions are discarded or revised before piloting. The psychometrics team performs a reading analysis and evaluates each item for evidence of bias related to race, gender, or ethnicity.

All items are pilot tested. All candidates receive piloted items during their examinations. Piloted items are indistinguishable from scored items but do not count toward a candidate's score. The psychometrics team, experts in testing, collects the response data and performs an item analysis after piloting. Psychometricians convert functioning, psychometrically sound items into scored test questions.

Once a test question passes piloting, the National Registry continuously reviews it for changes in performance. Any test question whose performance drifts is removed from the live examination, reviewed, revised, and re-piloted.

The National Registry provides candidates who do not meet entry-level competency with information about their testing experience. Studying examination items is not a helpful way to prepare. Studying the tasks, knowledge, skills, and abilities required to practice provides the best preparation.

Pearson VUE: The National Registry Test Provider

- Pearson VUE Professional Centers (PPCs) are testing centers owned and operated by Pearson and located in most urban areas.

- Pearson VUE Testing Centers (PVTCs) have a contractual relationship with Pearson VUE. These centers are generally located in smaller towns and rural areas. PVTCs are used to increase access to EMS testing in rural areas.

More information about OnVUE (online proctored) examinations can be found here.

The National Registry policy for online proctored examinations can be found here.

In some cases, the closest Pearson VUE test center is located in another state. Candidates can test at any authorized Pearson VUE test center in the United States at a convenient date, time, and location. The examination delivery process is the same regardless of where it is taken.

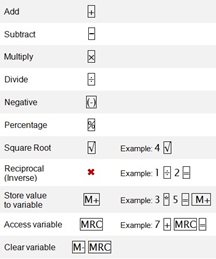

COGNITIVE EXAM ONSCREEN CALCULATOR

HELPFUL INFORMATION ABOUT THE EXAM

All exam items evaluate the candidate's ability to apply knowledge to perform the tasks required of entry-level EMS professionals. Answering a question incorrectly on the exam could correspond to choosing the wrong assessment or treatment in the field. There are some general concepts to remember about the cognitive exam:

Examination content reflects the National EMS Education Standards. The National Registry avoids testing specific details that vary by region, including local and state variations such as protocols. Some topics in EMS are controversial, and experts disagree on the single best approach to some situations. Therefore, the National Registry avoids testing controversial areas.

National Registry exams focus on what providers do in the field. Item writers do not rely on any single textbook or resource. National Registry examinations reflect accepted and current EMS practice. Fortunately, most textbooks are up-to-date and written to a similar standard, but no single source thoroughly prepares a candidate for the examination. Candidates are encouraged to consult multiple references, especially in areas in which they are having difficulty.

A candidate does not need to be an experienced computer user or have typing experience to take the computer-based exam. The National Registry designed the computer testing system for people with minimal computer experience and typing skills. Each candidate can complete a tutorial at the testing center before starting the examination.

PREPARING FOR THE EXAM

Here are a few simple suggestions that will help you to perform to the best of your ability on the examination:

- Study your textbook thoroughly and consider using the accompanying workbooks to help you master the material.

- Thoroughly review the current American Heart Association’s Guidelines for Cardiopulmonary Resuscitation and Emergency Cardiovascular Care. You will be tested on this material at the level of the exam you are taking.

- The National Registry does not recommend a particular study guide but recognizes that study guides can be useful. They may help you identify your weaknesses, but should be used carefully. Some study guides contain many easy questions, leading some candidates to believe that they are prepared for the exam when more study is warranted. Study guides are available from local bookstores and libraries. If you choose to use one, we suggest that you do so a few weeks before your actual exam. Use your score to identify your areas of strength and weakness, then re-read and study your notes and materials for the areas in which you did not do well.

- The National Registry is not able to provide candidates information about their specific deficiencies.

The Night Before the Exam

- Do not wait until the night before the exam to begin studying. If you encounter a topic you do not know well, there will not be enough time to review it, and cramming will only create stress.

- Get a good night’s sleep.

The Day of the Exam

- Eat a well-balanced meal.

- Arrive at the test center at least 30 minutes before the scheduled testing time.

  - The identification and examination preparation process takes time.

  - A candidate may need this time to review the tutorial on taking a computer-based test.

  - Arriving early will reduce stress.

- Before heading to the test center, be sure to have the proper identification as outlined in the confirmation materials.

  - A candidate will not be able to take the exam without the proper form of identification.

- Relax. Thorough preparation and confidence are the best ways to reduce test anxiety.

During the Exam

Take time to read each question carefully. The National Registry constructed its examinations to allow plenty of time to finish. Most successful candidates spend about 30 to 60 seconds per item, reading each question carefully and thinking it through.

- Fewer than 1% of candidates are unable to finish the exam. Thus, the risk of misreading a question is far greater than your risk of running out of time.

- Do not get frustrated. Everyone will find the examination difficult because of the adaptive nature of the CAT examination. The CAT algorithm adjusts the examination to the candidate’s maximum ability level, so a candidate may feel that all items are difficult. Instead, focus on one question at a time, do your best on that question, and move on.

After The Exam

- Examination results are not released at the test center or over the telephone.

- Examination results will be posted to a candidate’s National Registry account within two business days following the completion of the examination, provided the candidate has met all other registration requirements.

Candidates should log in to their account and click on “Dashboard” or “My Application > Application Status” to view examination results.